Guide to Chatbot Scams and Security: How to Protect Your Information Online and at Home

The realistic nature of automated chat features and devices may make people comfortable sharing their personal information. However, criminals often use these popular technologies in phishing schemes.

Automated chatbots have various uses today, from ordering pizza and checking bank account balances to providing mental health therapy. One in three U.S. men even use ChatGPT to help them with their relationships.

The uses for chatbots are nearly endless, but businesses primarily use them to engage website visitors and increase online purchases. This is one of the main drivers of the predicted 25 percent year-over-year growth in the market size for chatbots.

As businesses find more applications for chatbots, bad actors are also finding new ways to exploit chatbot users and steal their personal information. And unfortunately, most people are not aware of this threat. According to our recent study, 58 percent of adults said they didn’t know chatbots could be manipulated to gain access to personal information.

This guide will help you use chatbots safely and securely and show you how to spot a scammy chat before you share sensitive information.


Online chat risks and vulnerabilities

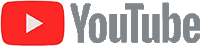

Although chat features on websites benefit businesses and customers, they carry certain risks and vulnerabilities to be aware of. Most people are not confident that these online chats are completely secure. In fact, only 11 percent were very or extremely confident that companies had sufficiently secured these chat features.

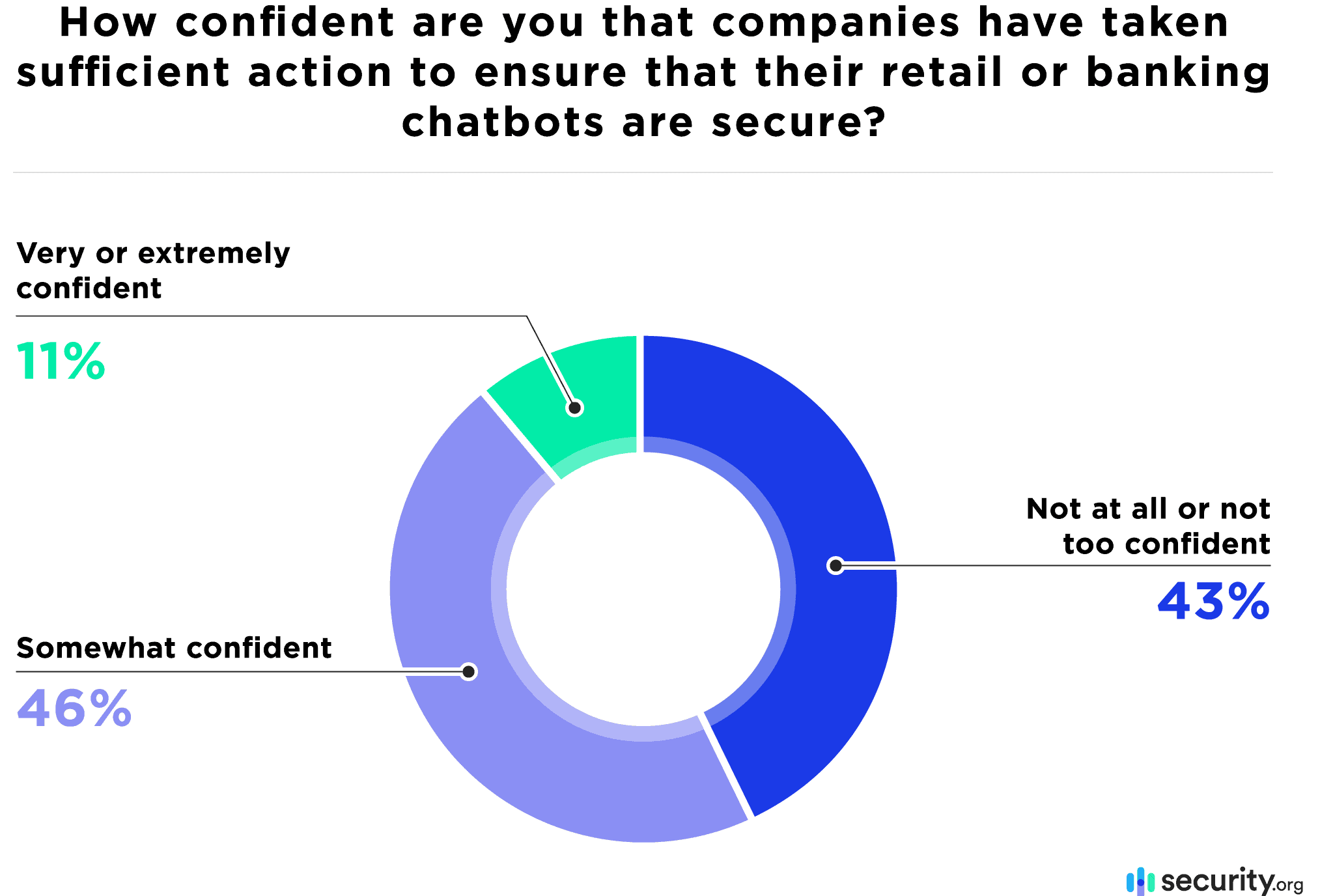

If customers would not feel safe placing an order with their credit card number over a public Wi-Fi network, they should not feel safe doing so through a chatbot, either. According to our research, people seemed to be more concerned about their information security in online banking chats than in online retailers’ chats. This makes sense, given the sensitive information associated with bank accounts that could be damaging if placed in the wrong hands.

However, even in retail shopping chats, criminals could access victims’ credit card information if a company has a data breach. If credit card or other personal data was shared and stored in automated chats, hackers could steal and manipulate it. No matter what chatbot you use, it’s essential to be vigilant and protective of your personal information.

Recent high-profile chatbot scams

Chatbot scammers often impersonate trustworthy brands when planning their schemes. According to Kaspersky Labs research, major brands like Apple, Amazon, and eBay are the ones that phishers impersonate most often. Here are a few examples of chatbot scams where bad actors pretend to represent major companies to obtain sensitive information from victims.

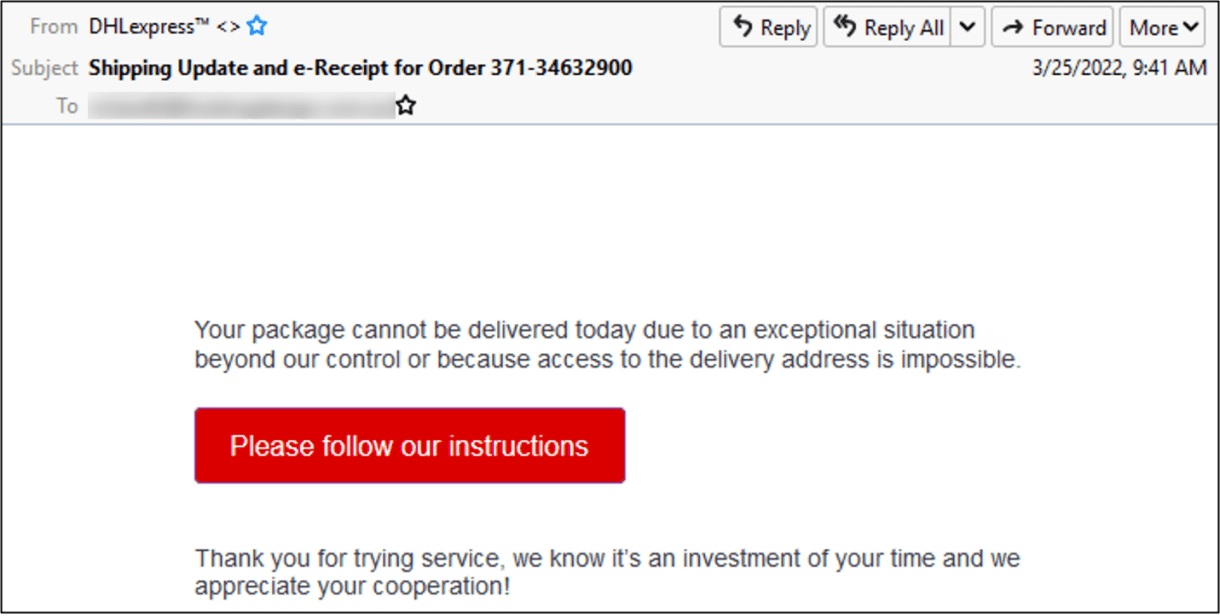

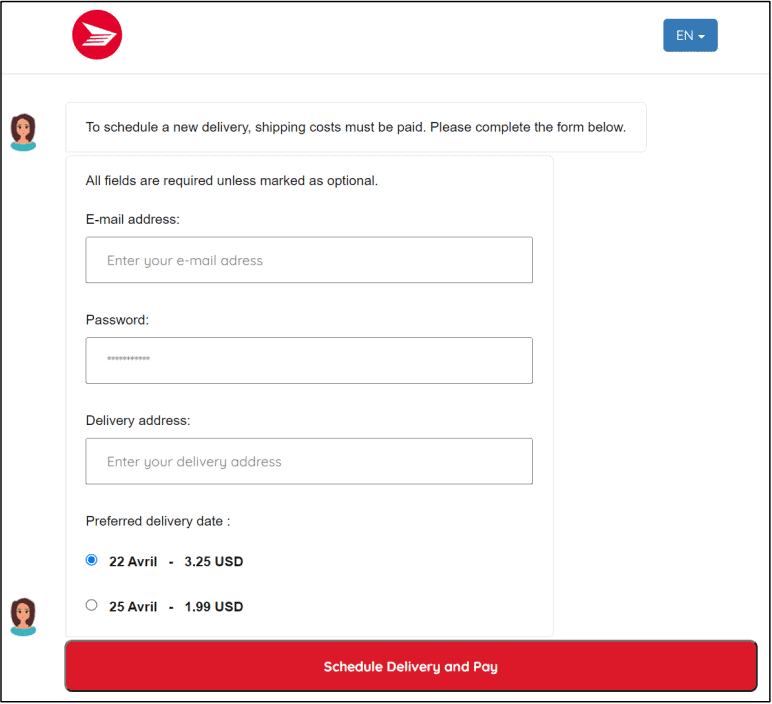

DHL chatbot scam

DHL is a courier, package delivery, and express mail service company. In May 2022, a phishing scam impersonating DHL spread, though it did not start in chatbot form. Essentially, the scammers asked unsuspecting people to pay additional shipping costs to receive packages. Victims had to share their credit card information to pay the shipping charge.

First, the victims received an email about DHL package delivery problems. If they clicked on the email link, they were eventually directed to a chatbot. The chatbot conversation may have seemed trustworthy to some users since it included a captcha form, email and password prompts, and even a photo of a damaged package. However, there were a few tell-tale signs that this was a scam:

- The “From” field in the email was blank.

- The website address was incorrect (dhiparcel.com, not DHL.com).
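
The website-address check above can be sketched in a few lines of Python. This is a minimal illustration, not a complete phishing detector: the `OFFICIAL_DOMAINS` allowlist and the function name `is_official_link` are assumptions made for the example, and a real check would rely on the domains the company itself publishes.

```python
from urllib.parse import urlsplit

# Hypothetical allowlist for illustration only.
OFFICIAL_DOMAINS = {"dhl.com"}


def is_official_link(url: str, official_domains=OFFICIAL_DOMAINS) -> bool:
    """Return True if the link's host is an official domain or a subdomain of one."""
    # urlsplit only finds a hostname when the URL includes a scheme such as https://
    host = (urlsplit(url).hostname or "").lower().rstrip(".")
    return any(host == d or host.endswith("." + d) for d in official_domains)


print(is_official_link("https://www.dhl.com/tracking"))       # official subdomain
print(is_official_link("https://dhiparcel.com/tracking"))     # lookalike from the scam
print(is_official_link("https://dhl.com.example.net/track"))  # official name used as a prefix
```

Comparing the whole host name, rather than searching for "dhl" anywhere in the address, is what catches lookalikes such as dhiparcel.com and addresses that merely begin with the official name.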

Did You Know: Phishing scams don’t just end in your inbox. Cybercriminals have evolved their phishing scams to include text messages. Read our guide to phishing text messages, known as “smishing,” to learn more. We’ve also created a guide on how to stop spam texts.

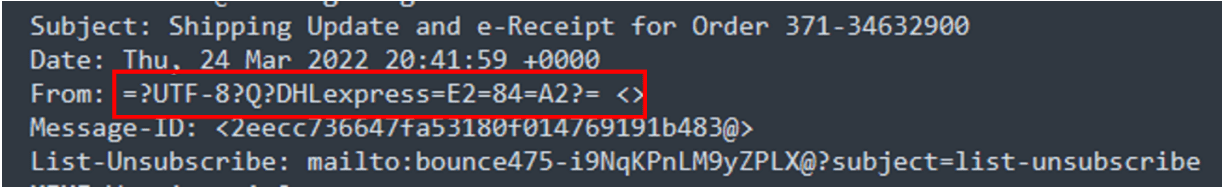

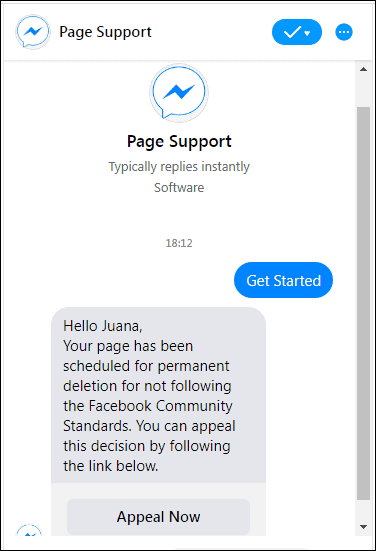

Facebook Messenger chat scam

Meta’s Messenger and Facebook have also been targeted in a chatbot scam. Some Facebook users received a fraudulent email claiming that their page violated community standards and that their Facebook account would be automatically deleted if they didn’t appeal the decision within 48 hours.

A link took unsuspecting readers to an automated support chat within Facebook Messenger. The chatbot directed them to share their Facebook username and password with the scammers, which was the scheme’s goal.

There were a few clear signs that this was a scam:

- The email’s sending domain and “from” address were not Facebook, Meta, or Messenger.

- The website was not an official Facebook support page.

- The Facebook page associated with the chatbot had no posts or followers.

- The chatbot prompted users to share their usernames and passwords.

How to stay safe while using chatbots

Chatbots can be hugely valuable and are typically very safe, whether you’re using them online or in your home via a device such as the Amazon Echo Dot. Still, a few telltale signs may indicate that a scammy chatbot is targeting you.

Here are a few ways to stay safe and spot fraudulent chats while on the internet:

- Use chatbots only on websites you have navigated to yourself (do not use chatbots on websites you reached by clicking on links in suspicious emails or texts).

- If you receive an email with a link to a chatbot, always verify the “from” address before clicking on any links. For example, if the sender claims to be from Walmart, the from address suffix should match Walmart’s web address.

- Only click on links in texts or emails you were expecting or from senders you know.

- Ignore tempting offers and incredible prizes, especially when they appear out of nowhere. Chatbot scams typically originate from pop-ups and links from websites, emails, and text messages. For example, the Apple iPhone 12 scam started with a text message. Text messages give scammers a significant advantage over email: shortened URLs hide questionable destinations, and abbreviated or incorrect grammar stands out less in a text.

- Follow the maxim that if a prize or offer seems too good to be true, it is.

- If you receive a suspicious message, do an internet search for the company name and the offer or message in question. If the offer is real and valid, you will likely find more information about it on the company’s website. If it is fake, you might find news reports about the scam or no information at all.

- Use two-factor or multi-factor authentication to further protect your accounts from unauthorized users.

- Be wary of unexpected or random requests for your payment information or personal details via chatbots. If it’s a real company, it will not ask you sensitive questions via chat.

- Always keep your security software and browsers updated.

- Be vigilant about suspicious chatbot messages and report any malicious activity.

- Refrain from using unsecured or public Wi-Fi networks to access your sensitive information.

If you receive frequent texts from unknown numbers that contain suspicious links, you can adjust your carrier settings to filter out spam calls and texts. You can also download mobile apps to block shady numbers and texts. Forward any scam text messages you receive to 7726 (SPAM), which helps your wireless carrier spot and block similar messages, and report them to the Federal Trade Commission (FTC) at ReportFraud.ftc.gov so the agency can track and investigate the fraud.
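
The “from” address check in the list above can also be expressed as a short Python sketch. The function name `sender_matches` and the sample addresses are hypothetical. Keep in mind that a matching domain does not prove an email is genuine, because “from” headers can be spoofed; a mismatch or a blank sender, however, is a strong warning sign.

```python
from email.utils import parseaddr


def sender_matches(from_header: str, company_domain: str) -> bool:
    """Return True if the sender's domain is the company's domain or a subdomain of it."""
    _, address = parseaddr(from_header)
    if "@" not in address:
        # Covers blank or unparseable "From" fields.
        return False
    domain = address.rsplit("@", 1)[1].lower()
    company_domain = company_domain.lower()
    return domain == company_domain or domain.endswith("." + company_domain)


print(sender_matches("Walmart Support <help@walmart.com>", "walmart.com"))
print(sender_matches("Walmart <prizes@walmart-rewards.example>", "walmart.com"))
print(sender_matches("", "walmart.com"))
```

Only the part of the address after the “@” is compared, since the display name in front of it can be set to anything the sender likes.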

AI home assistant bot risks and vulnerabilities

Chatbot threats aren’t only online – most Americans have already opened their homes to very similar interfaces. The same conversational AI powering internet chatbots is built into virtual personal assistants (VPAs) like Amazon’s Alexa, Google Assistant, and Apple’s Siri.

More than half of respondents in a survey we conducted own an AI assistant, meaning that 120 million Americans share personal information with such devices regularly. Amazon’s Alexa is the most popular model today.

Home assistants offer convenience by handling household operations with an understanding of our personal needs and preferences. That functionality also makes the interfaces a risk to privacy and security. Appropriately, not everyone trusts them.

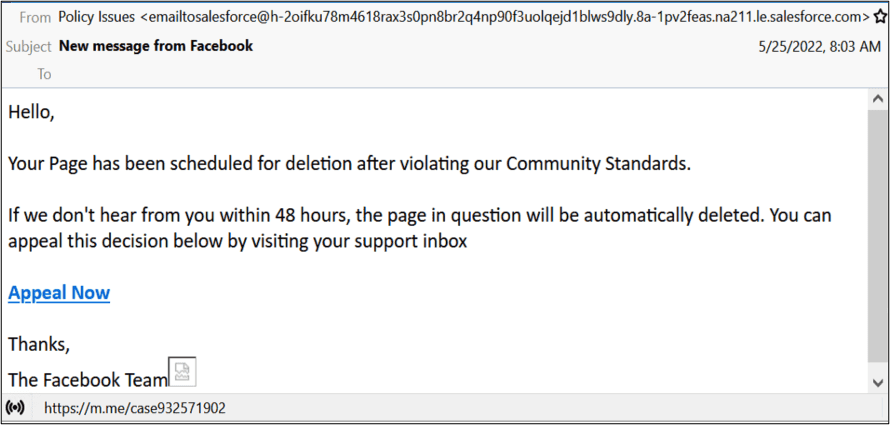

More than 90 percent of users have doubts about home assistant security, and fewer than half have any level of confidence in it.

This skepticism is justified. Voice-activated assistants lack many protocols that can foil a browser-linked scambot. Rather than requesting password logins through verifiable web pages, assistants can accept commands from anyone without visual confirmation of their remote connections. This process allows for fraud on either end of the equation. Add always-on microphones, and you have a recipe for potential disaster.

Some VPA security lapses are borderline comical, like when children commandeer them to buy toys with parental credit cards. Other stories feel far more nefarious. For instance, Amazon admits to listening in on Alexa conversations even as Google and Apple face litigation for similar practices.

Third-party hacks are particularly frightening since virtual assistants often have access to personal accounts and may be connected to household controls (lighting, heating, locks, and more).


Outside breaches of AI assistants usually fall into one of the following categories:

- Eavesdropping: The most basic exploit of an always-on microphone is turning it into a spying device. Constant listening is intended as a feature, not a bug: devices passively await “wake words,” manufacturers review interactions to improve performance, and some VPAs allow remote listening for communication or monitoring purposes. Hijacking this capability would effectively plant an evil ear right in your home. Beyond creepily invading your privacy, such intrusions could capture financial details, password clues, blackmail material, and even confirmation that a residence is empty.

- Imposters: Smart speakers connect customers to services via voice commands with little verification, a vulnerability that clever programmers abuse to fool unwitting users. Hackers can employ a method called “voice squatting,” where unwanted apps launch in response to commands that sound like legitimate requests. An alternate approach called “voice masquerading” involves apps pretending to close or connect elsewhere. Rather than obeying requests to shut down or launch alternate apps, corrupt programs feign execution, then collect information intended for others. According to our study, only 15 percent of respondents knew of these possible hacks.

- Overriding: Several technological tricks can allow outsiders to control AI assistants remotely. Devices rely on ultrasonic frequencies to pair with other electronics, so encoded voice commands transmitted above 20 kHz are inaudible to humans but received by compliant smart speakers. These so-called “dolphin attacks” can be broadcast independently or embedded within other audio. Researchers have also triggered assistant actions via light commands – fluctuating lasers interpreted as voices to control devices from afar. Eighty-eight percent of AI assistant owners in our study had never heard of these dastardly tactics.

- Self-hacks: Researchers recently uncovered a method to turn smart speakers against themselves. Hackers within Bluetooth range can pair with an assistant and use a program to force it to speak audio commands. Since the chatbot is chatting with itself, the instructions are perceived as legitimate and executed – potentially accessing sensitive information or opening doors for an intruder.

Ways to secure your AI home assistant devices

Manufacturers issue updates to address security flaws, but when personal assistant divisions like Alexa lose billions of dollars, such support is likely to be slashed. Luckily, there are simple steps that consumers can take to help safeguard their devices.

- Mute the mic: Turning off an assistant’s microphone when not actively in use may reduce fun and convenience (preventing one from randomly soliciting a song or weather report) but shuts the door on many attacks.

- Add a PIN or voice recognition: Most AI assistants can require voice-matching, personal identification numbers, or two-factor authentication before executing costly commands. Activating these safeguards keeps a device from obeying unauthorized users and stops children from making purchases without authorization.

- Delete and prevent recordings: Users can revoke manufacturers’ permission to record or review audio commands. Within the “Alexa Privacy” (Amazon) or “Activity Controls” (Google) settings, owners can find the option to disallow saving or sending recordings. Apple devices no longer record users by default, though owners can opt in and allow it.

- Set listening notifications: Configuring assistants to emit audible alerts when actively listening or acknowledging commands provides a reminder when ears are open…and can uncover external ultrasonic or laser attacks.

- Disable voice purchasing: Impulse buying with only a sentence spoken aloud is a modern marvel that’s rarely necessary. Given their security shortcomings, allowing virtual assistants to access or execute financial transactions is risky. Have the assistant place items on a list instead, then confirm the purchase from a more secure terminal. Users might even save money by reconsidering late-night splurges.

- Keep your firmware updated: Make sure your AI assistant devices are regularly updated with the latest software. Updates patch the vulnerabilities that new attacks exploit in devices running outdated software.

- Keep your Wi-Fi network secured: Don’t just assume your Wi-Fi network is unhackable. Use a strong password and enable WPA3 encryption if possible.

- Limit third-party apps: Don’t just connect every single third-party app possible. Only enable the ones you trust.

Additionally, remember to follow the baseline safety practices that apply to any online device. Some consider VPAs family members rather than gadgets, yet it’s still critical to keep firmware updated, use strong passwords, and connect them only to secured personal routers.

Dating app chatbot scams

Beware of chatbot scams if you’re looking for love on dating apps. From January 2023 to January 2024, there was a 2,087 percent increase in scammers using chatbots and AI-generated text on dating apps.

Scammers use bots to sign up for new accounts and create fake dating profiles. This occurs on a massive scale: reported romance scams in 2022 cost about $1.3 billion in the United States. You may be especially at risk if you’re between 51 and 60 years old, according to Barclays research.

AI technology such as ChatGPT is allowing scammers to be more successful at making conversation with potential victims. Still, there are signs that you may be talking to a bot or a scammer relying on AI to make conversation:

- The profile photos are “too good to be true” (you can run a reverse image search with Google Images to see if the pictures appear elsewhere online)

- The profile is bare bones and has few details

- The user asks to move the conversation off the app as soon as possible (before the app kicks the scammer off due to user reports or AI detector tools)

- You notice personality and tone inconsistency, which is common when multiple scammers converse through a single profile

- They refuse to do video or phone calls

- They send messages at odd times of the day

- They ask you for money

- They avoid answering personal questions or provide vague responses

- They exhibit poor grammar or unusual phrasing despite claiming to be native speakers

- They send links or attachments without context or explanation

As recently as a few years ago, one person could run 20 dating scams per day. With automated chatbots now, though, it’s possible to work hundreds of thousands of scams at once.


Conclusion: What you should never share with chatbots

Most of the time, chatbots are legitimate and as safe as any other apps or websites. Security measures like encryption, data redaction, multi-factor authentication, and cookies keep information secure on chatbots. If you accidentally give a legitimate chatbot your Social Security number or date of birth, redaction features can automatically erase the data from the transcript.
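
To give a rough idea of how transcript redaction can work, here is a minimal Python sketch. It does not represent any particular chatbot vendor’s implementation: the patterns, the placeholder labels, and the function name `redact` are assumptions made for illustration, and production systems typically use far more thorough detection.

```python
import re

# Simplified, illustrative patterns (assumptions, not a vendor's real rules).
SSN_PATTERN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")          # e.g. 123-45-6789
CARD_PATTERN = re.compile(r"\b\d(?:[ -]?\d){12,15}\b")      # 13 to 16 digits, optional spaces or dashes


def redact(message: str) -> str:
    """Replace anything that looks like an SSN or card number with a placeholder."""
    message = SSN_PATTERN.sub("[REDACTED SSN]", message)
    message = CARD_PATTERN.sub("[REDACTED CARD]", message)
    return message


print(redact("My SSN is 123-45-6789 and my card is 4111 1111 1111 1111."))
```

Pattern matching of this kind only catches data in familiar formats, which is one more reason not to rely on redaction as a safety net.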

That said, you should tread carefully when sending personally identifiable information and other types of sensitive data. Sometimes, sending PII is unavoidable since interacting with a chatbot is similar to logging into an account and submitting PII yourself. Here are some personal data points you should not send via chatbot:

- Social Security number

- Credit card numbers

- Bank information or account numbers

- Medical information

At a minimum, do not overshare information. Refrain from sending or volunteering extra information beyond what is required. For instance, don’t give your complete address if the chatbot asks only for your ZIP code. Never share information you would ordinarily not need to, and think carefully before sending any personal information online. For example, you would never have to share your Social Security number to check on a package delivery or get your bank balance.